Tired of your staff wasting countless hours pulling critical information from the internet, like contact information, shipment tracking, competitor pricing, data from portals, and more? These tasks might seem simple, but searching websites and portals and copying and pasting the information into Excel quickly eats up your team’s time. Plus, manually entering data into a spreadsheet is highly prone to human error. With robotic process automation (RPA), you can streamline these repetitive tasks by automatically scraping data from websites.

Automated data scraping collects data from many sources and pulls it into one place, such as an Excel spreadsheet. This cuts down on copy-and-paste errors and gives your team time back for more critical projects. Here are just some of the ways real companies are using automated data scraping:

- Gathering contact information from an online portal

- Price comparisons for competitive analysis

- Monitoring real estate prices from MLS

- Collecting test data for machine learning projects

- Tracking shipments from UPS, FedEx, etc.

- And many more!

How Automated Web Scraping to Excel Works

Traditionally, web data extraction involves writing custom processing and filtering algorithms to grab structured data from a website or portal. Structured data (for example, the rows and columns of an HTML table) is easy to search and group, and it maps naturally onto an Excel worksheet. Once you have the data, you’ll need additional scripts, or an additional tool, to transport it to Excel or other applications in your environment.
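For comparison, here is a minimal sketch of that script-based approach, assuming the data you want sits in a plain HTML `<table>` that is already downloaded. It uses only Python’s standard library: `html.parser.HTMLParser` pulls out the cell text row by row, and the `csv` module turns the rows into CSV text that Excel can open. The class and function names (`TableParser`, `table_to_csv`) are hypothetical, and writing a native .xlsx file would need a third-party library instead.

```python
import csv
import io
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []     # finished rows (lists of cell strings)
        self._row = None   # row currently being built
        self._cell = None  # text fragments of the current cell

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            # Close the cell and store its trimmed text in the current row.
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if self._row:
                self.rows.append(self._row)
            self._row = None
            self._cell = None

    def handle_data(self, data):
        # Only keep text that appears inside a table cell.
        if self._cell is not None:
            self._cell.append(data)


def table_to_csv(html: str) -> str:
    """Extracts table rows from an HTML string and returns them as CSV text."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    out = io.StringIO()
    csv.writer(out).writerows(parser.rows)
    return out.getvalue()
```

Saving the returned text to a `.csv` file lets you open it directly in Excel. Real sites are rarely this tidy: pages that load data with JavaScript, require a login, or change their layout each need extra handling, which is the maintenance burden the next paragraph describes.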

RPA simplifies Excel automation by providing one tool to scrape, extract, and write data directly into Excel, documents, reports, or other critical business applications. Using one tool, like Fortra’s Automate, removes the need for custom scripts and keeps you from bloating your tech stack with more tools. Plus, bringing data extraction and transformation together in one tool greatly reduces errors by moving the information you need directly to where you need it.

How to Scrape Data From a Website to Excel Using Macros

The most common way organizations automate Excel processes is with VBA (Visual Basic for Applications), Microsoft’s programming language for Office. Programs written in VBA are commonly known as macros. Using Excel macros can increase efficiency and save time, but there are also several drawbacks to be aware of. For starters, macros aren’t user-friendly and require a fair amount of coding knowledge to set up automations.

Macros also tend to grow more complicated over time, and the more widely a spreadsheet is shared, the more likely it is that someone changes the layout or business logic the macros depend on, which can break them. Plus, macro-enabled files are a common way for malware to spread, so they can pose a security risk to your business. Using an RPA tool avoids these issues by providing a user-friendly, centralized, and secure way to automate Excel processes.

7 Easy Steps to Scrape Data from a Website to Excel Automatically

There are several ways to scrape data from a web page, but most involve creating custom processing and filtering algorithms for each site. These require you to write additional scripts or build a separate tool to integrate the scraped data with the rest of your IT infrastructure. With the web scraping tool in Automate, you can take information from web pages and put it directly into an Excel sheet for analysis, all without writing any code.

In the video below, you’ll see an Automate bot running a task that enters UPS tracking numbers into the UPS website, performs automated data scraping to get delivery tracking information, and enters it into an Excel file. After the task runs, it goes on to show how that task was built. Watch and then try it yourself with this helpful, step-by-step tutorial.

All but step 1 are shown in the video.

Step 1: Download an Automate trial

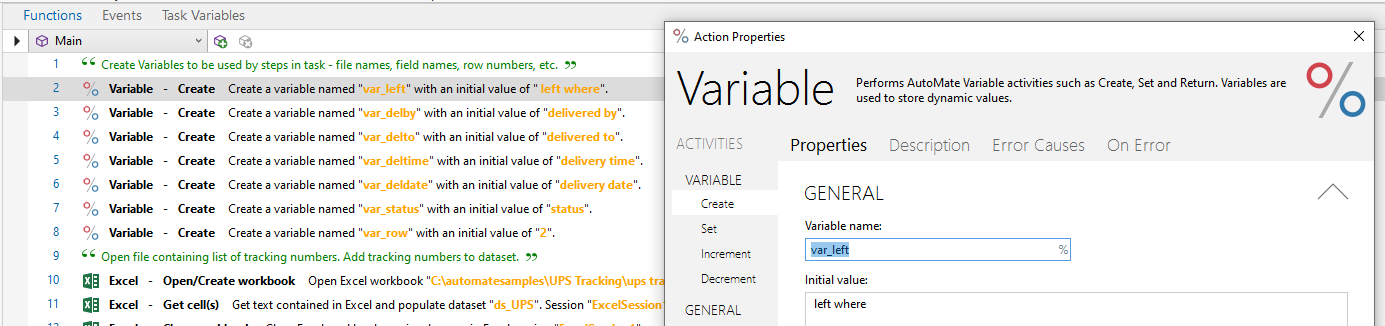

Step 2: Build the task, starting with variables. (If you need a basic primer on how to build Automate tasks, Automate Academy is a great place to learn.) In this task, you’ll add variables for file names, rows, and so on. Notice that the task builder is drag-and-drop, with no coding required!

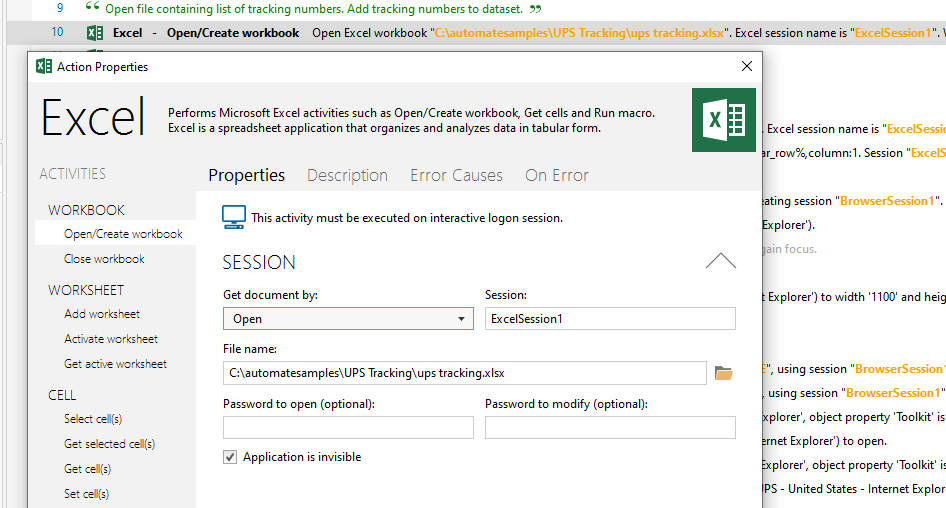

Step 3: Open the Excel workbook that contains the tracking numbers. You’ll store them as a dataset to use later on.

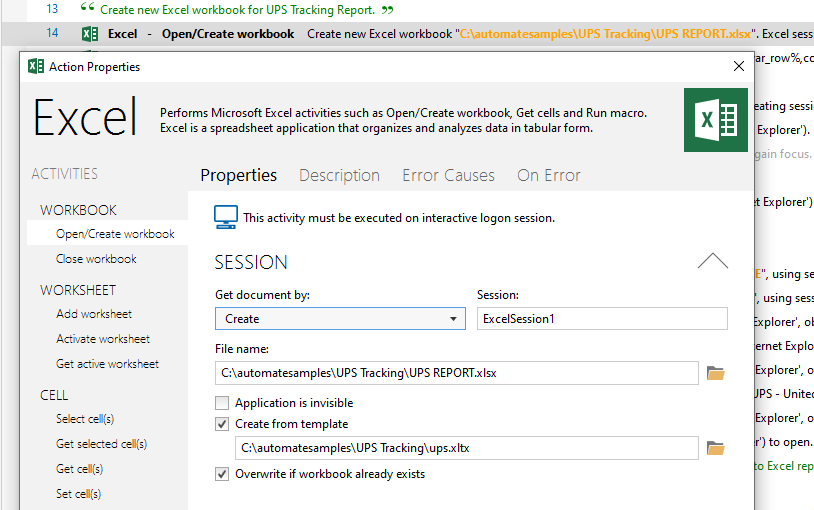

Step 4: Add a step that creates a report workbook to write the dataset to.

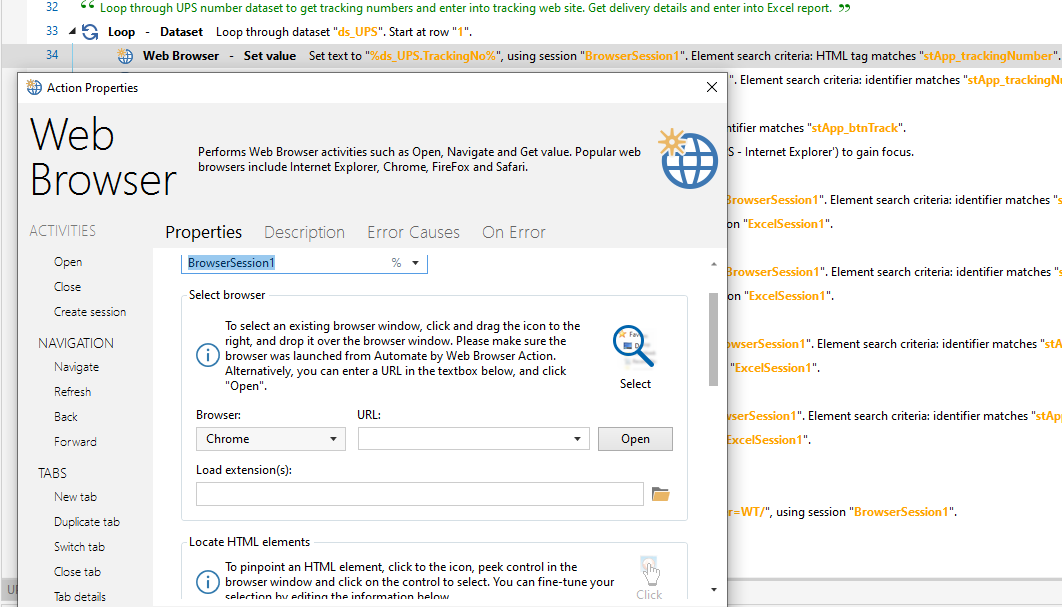

Step 5: Use the report workbook with tracking numbers and column headings in a web browser activity.

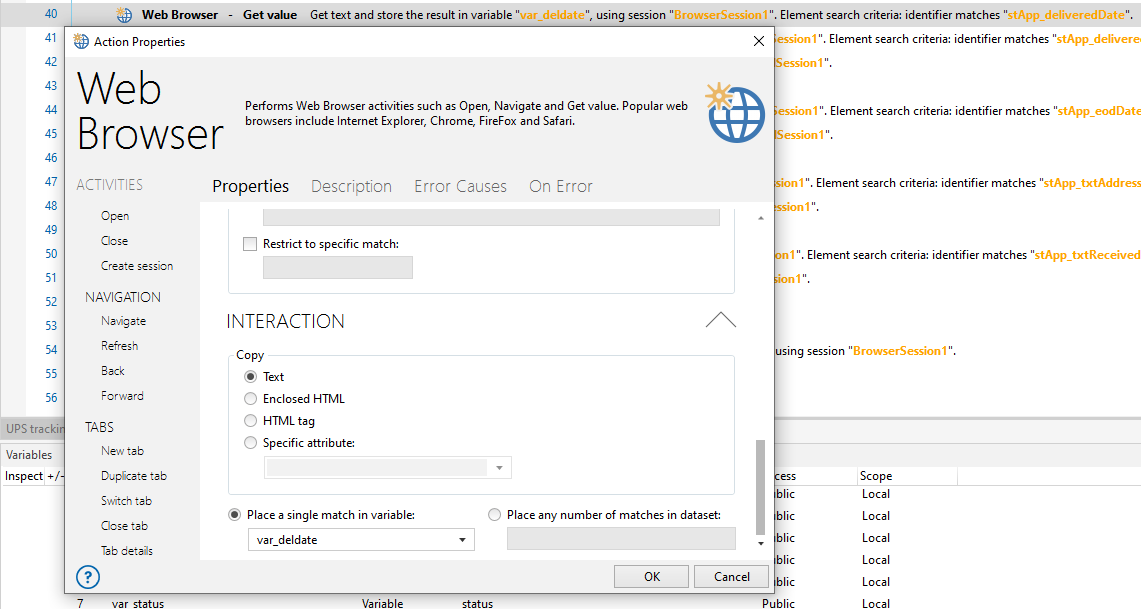

Step 6: Identify which pieces of information you need. This includes telling the Automate bot where on the page to find the data you want scraped. Put this in a loop that goes through all the tracking numbers, so the bot scrapes the tracking data for each one from the UPS website into Excel.

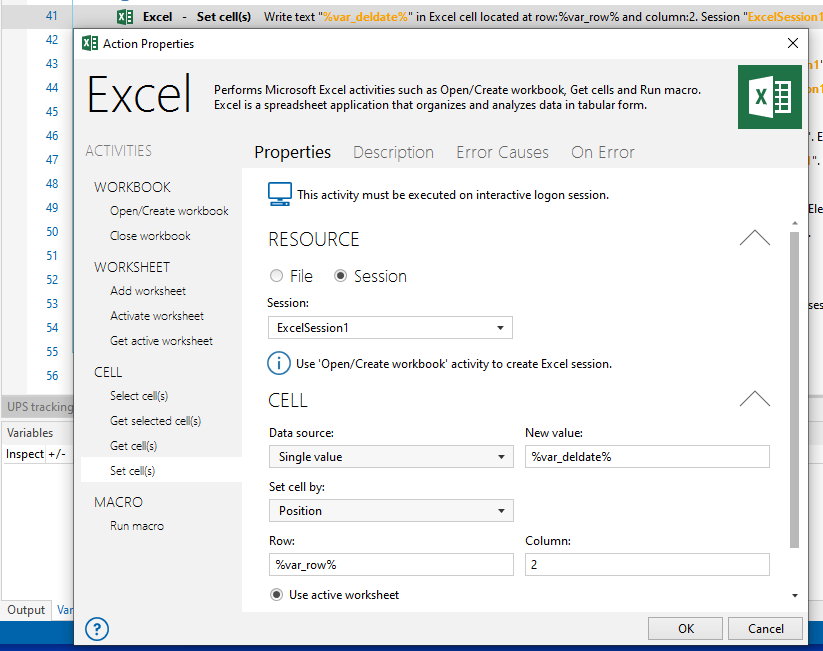

Step 7: For each piece of data you want scraped from the website, write the variable value to a cell in the workbook.
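In Automate, steps 3 through 7 are built with drag-and-drop actions, so no code is needed. For readers who want to see the loop logic spelled out, here is a minimal Python sketch of the same flow. It is not how Automate works internally. `lookup` is a hypothetical stand-in for the browser step that scrapes the UPS page, the column names in `FIELDS` are made up for illustration, and CSV text stands in for the input and report workbooks.

```python
import csv
import io
from typing import Callable, Dict, Iterable, List

# Hypothetical column headings for the report workbook.
FIELDS = ["tracking_number", "status", "delivered_on"]


def read_tracking_numbers(csv_text: str) -> List[str]:
    """Step 3: reads the first column of the input sheet, skipping the header row."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    return [r[0].strip() for r in rows[1:] if r and r[0].strip()]


def build_report(
    tracking_numbers: Iterable[str],
    lookup: Callable[[str], Dict[str, str]],
) -> str:
    """Steps 4-7: loops over tracking numbers, scrapes each one via `lookup`,
    and writes one report row per number. Returns the report as CSV text."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()                    # step 4: report with column headings
    for number in tracking_numbers:         # step 6: loop over every number
        scraped = lookup(number)            # step 6: scrape one tracking page
        row = {"tracking_number": number}
        for field in FIELDS[1:]:            # step 7: one scraped value per cell
            row[field] = scraped.get(field, "")  # blank cell if nothing was found
        writer.writerow(row)
    return out.getvalue()
```

Passing the scraping step in as a function keeps the loop independent of any particular website, so you can try it with a stub such as `lambda n: {"status": "Delivered"}` before wiring in a real lookup.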

This is just one example of Excel automation. There are so many other ways Automate and Excel can work together to take manual work off your plate.

Download Automate and Try it Yourself!

Get started with Automate and see how our data extraction tools keep your critical data moving without the need for tedious manual tasks or custom script writing.